The intersection of physics-inspired artificial intelligence and data center cooling represents one of the most promising frontiers in sustainable computing. As global data consumption skyrockets, traditional cooling methods struggle to keep up with the heat generated by densely packed server racks. Enter fluid dynamics optimization—a field where AI, guided by the fundamental principles of fluid mechanics, is revolutionizing how we manage thermal loads in data centers.

The Thermodynamic Challenge of Modern Data Centers

Data centers now account for roughly 1% of global electricity consumption, and cooling can represent up to 40% of a facility's energy draw. Conventional approaches, from computer room air conditioning (CRAC) units to raised-floor designs, rely on brute-force methods that often cool entire rooms rather than targeting the actual heat sources. This inefficiency becomes glaringly apparent in hyperscale facilities housing hundreds of thousands of servers, where a difference of just a few degrees in operating temperature can translate into millions of dollars in annual energy savings or losses.
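The two percentages in the paragraph above compound. A quick calculation, using only the figures stated in the text, shows what they imply for cooling's share of global electricity:

```python
DATA_CENTER_SHARE = 0.01   # data centers: roughly 1% of global electricity (figure from the text)
COOLING_SHARE_MAX = 0.40   # cooling: up to 40% of a data center's energy draw (figure from the text)

# Upper bound on cooling's share of all electricity consumed worldwide.
cooling_share_of_global = DATA_CENTER_SHARE * COOLING_SHARE_MAX
print(f"Cooling accounts for up to {cooling_share_of_global:.1%} of global electricity")
# -> Cooling accounts for up to 0.4% of global electricity
```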

Heat transfer in these environments is extraordinarily complex. Turbulent airflow interacts nonlinearly with server geometries, while local hotspots emerge unpredictably as workloads shift across server clusters. Traditional computational fluid dynamics (CFD) simulations, though valuable, become computationally prohibitive when used for real-time optimization across entire facilities.
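A toy example makes the cost argument concrete. The sketch below is only a 2D heat-diffusion stand-in, not a CFD solver (no airflow, no turbulence): it steps the heat equation forward with an explicit finite-difference scheme from a single hot cell. Because this scheme is stable only when dt ≤ dx²/(4α), halving the grid spacing quadruples the number of cells and also quadruples the number of time steps, so the work grows roughly 16-fold in 2D and about 32-fold in 3D. The grid sizes, end time, and unit diffusivity are illustrative assumptions.

```python
def simulate_hotspot(n, t_end=0.01, alpha=1.0):
    """Explicit finite-difference solution of dT/dt = alpha * (d2T/dx2 + d2T/dy2)
    on the unit square, walls held at T = 0 and one hot cell in the middle.
    Returns the final grid and the number of cell updates performed."""
    dx = 1.0 / (n - 1)
    dt = 0.2 * dx * dx / alpha          # below the explicit stability limit dx^2 / (4 * alpha)
    steps = max(1, round(t_end / dt))
    r = alpha * dt / (dx * dx)          # = 0.2 by construction
    temp = [[0.0] * n for _ in range(n)]
    temp[n // 2][n // 2] = 1.0          # stand-in for a server hotspot
    updates = 0
    for _ in range(steps):
        new = [row[:] for row in temp]  # boundary cells keep their fixed value
        for i in range(1, n - 1):
            for j in range(1, n - 1):
                laplacian = (temp[i + 1][j] + temp[i - 1][j]
                             + temp[i][j + 1] + temp[i][j - 1] - 4.0 * temp[i][j])
                new[i][j] = temp[i][j] + r * laplacian
                updates += 1
        temp = new
    return temp, updates


for n in (11, 21, 41):
    _, work = simulate_hotspot(n)
    print(f"{n}x{n} grid: {work} cell updates")
```

With these settings the 21×21 run performs roughly 18 times as many cell updates as the 11×11 run, which is why brute-force resolution of a whole facility in real time quickly becomes impractical.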

Fluid Intelligence: When AI Learns the Navier-Stokes Equations

Recent advances in physics-informed neural networks (PINNs) have produced AI models whose predictions respect the physics of fluid flow. Unlike black-box machine learning approaches, these models build the Navier-Stokes equations, the fundamental laws governing fluid motion, directly into their training objective: the network is penalized whenever its predicted flow field violates those equations. The result is a model that can approach the accuracy of high-fidelity simulations at a fraction of the computational cost.
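A full Navier-Stokes PINN does not fit in a short example, but the core idea, a loss that combines a physics residual with boundary data, can be shown on a much simpler problem. This sketch assumes 1D steady heat conduction, k·u″(x) + q = 0 on [0, 1] with u(0) = u(1) = 0, and swaps the neural network for a cubic polynomial so that derivatives are exact. Because that model is linear in its coefficients, the loss is minimized exactly with linear least squares instead of gradient-based training. The constants `K`, `Q`, and `BOUNDARY_WEIGHT` are illustrative assumptions.

```python
K = 1.0                  # assumed thermal conductivity (nondimensional)
Q = 2.0                  # assumed uniform heat source (nondimensional)
BOUNDARY_WEIGHT = 10.0   # hypothetical weight on the boundary-condition terms


def basis(x):
    """Cubic polynomial features [1, x, x^2, x^3]; a stand-in for a neural network."""
    return [1.0, x, x * x, x ** 3]


def basis_second_derivative(x):
    """Exact second derivative of each basis function."""
    return [0.0, 0.0, 2.0, 6.0 * x]


def solve(a, b):
    """Solve the square linear system a @ c = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    coeffs = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * coeffs[c] for c in range(r + 1, n))
        coeffs[r] = (m[r][n] - tail) / m[r][r]
    return coeffs


def fit(n_collocation=20):
    """Minimize sum(physics residual^2) + sum(weighted boundary error^2) over the coefficients."""
    rows, targets = [], []
    for i in range(n_collocation):
        x = (i + 0.5) / n_collocation
        # Physics term: K * u''(x) should equal -Q, i.e. K * u'' + Q = 0.
        rows.append([K * d for d in basis_second_derivative(x)])
        targets.append(-Q)
    for x in (0.0, 1.0):
        # Data term: the walls are held at u = 0.
        rows.append([BOUNDARY_WEIGHT * p for p in basis(x)])
        targets.append(0.0)
    # Normal equations (A^T A) c = A^T t give the least-squares minimizer.
    n = len(rows[0])
    ata = [[sum(row[i] * row[j] for row in rows) for j in range(n)] for i in range(n)]
    att = [sum(row[i] * t for row, t in zip(rows, targets)) for i in range(n)]
    return solve(ata, att)


def predict(coeffs, x):
    """Evaluate the fitted polynomial at x."""
    return sum(c * p for c, p in zip(coeffs, basis(x)))


coeffs = fit()
print(round(predict(coeffs, 0.5), 6))  # -> 0.25
```

For k = 1 and q = 2 the exact solution is u(x) = x(1 − x), and the fit recovers u(0.5) = 0.25 without ever seeing an interior temperature measurement: the physics residual alone pins down the interior.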

At the Massachusetts Institute of Technology, researchers demonstrated a neural network that could optimize cooling strategies in a simulated data center 1,000 times faster than traditional CFD methods. The AI didn't just mimic fluid behavior—it discovered novel vortex patterns that enhanced natural convection around server racks, reducing cooling energy by 22% compared to best-practice layouts.

The Emergence of Dynamic Cooling Topologies

What makes physics-inspired AI truly transformative is its ability to adapt continuously to changing conditions. DeepMind's collaboration with Google's data center operators showed that AI could dynamically adjust cooling parameters, from fan speeds to vent openings, in response to real-time workload fluctuations. Their system achieved a 40% reduction in cooling energy while maintaining safer operating temperatures than human-managed systems.
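The details of DeepMind's system are not described here. As a hedged sketch of the general pattern of model-based control, the code below uses a made-up surrogate model to predict server inlet temperature for each candidate fan speed, then picks the lowest-power setting that stays under a temperature limit, re-deciding as the IT load changes. Every constant and the surrogate itself are hypothetical; only the cubic relationship between fan speed and fan power (the fan affinity law) is a standard approximation.

```python
SUPPLY_AIR_C = 18.0    # hypothetical supply-air temperature, deg C
RISE_PER_KW = 0.05     # hypothetical inlet rise (deg C) per kW of IT load at 100% fan speed
FAN_RATED_KW = 5.0     # hypothetical fan power at 100% speed
INLET_LIMIT_C = 32.0   # hypothetical maximum allowed server inlet temperature


def predicted_inlet_temp(speed, load_kw):
    """Toy surrogate: airflow scales with fan speed, so the temperature rise scales with load / speed."""
    return SUPPLY_AIR_C + RISE_PER_KW * load_kw / speed


def fan_power_kw(speed):
    """Fan affinity law: power grows roughly with the cube of fan speed."""
    return FAN_RATED_KW * speed ** 3


def choose_fan_speed(load_kw):
    """Return the lowest-power candidate speed whose predicted inlet temperature is within the limit."""
    candidates = [pct / 100 for pct in range(30, 101, 5)]
    feasible = [s for s in candidates if predicted_inlet_temp(s, load_kw) <= INLET_LIMIT_C]
    if not feasible:
        return 1.0  # no safe setting predicted: fall back to full speed
    return min(feasible, key=fan_power_kw)


for load in (120.0, 200.0, 260.0):
    speed = choose_fan_speed(load)
    print(f"load {load:.0f} kW -> fan {speed:.0%}, {fan_power_kw(speed):.2f} kW")
```

A learned system would replace the hand-written surrogate with a model trained on sensor history, but the decision step (predict the outcome of each action, then pick the cheapest safe one) has the same shape.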

More radical implementations are exploring morphing baffles and adaptive ductwork that physically reshape cooling pathways. At the University of Cambridge, engineers combined shape-memory alloys with AI-controlled fluid dynamics to create "living" cooling systems that evolve their geometry based on thermal maps. Early prototypes show 30% better heat transfer efficiency compared to static designs.

Liquid Cooling Meets Machine Learning

As direct-to-chip liquid cooling gains traction, AI optimization becomes even more crucial. The interplay between microchannel flows, pump pressures, and thermal gradients creates a multidimensional optimization problem well suited to physics-aware machine learning. Companies such as JetCool and CoolIT now employ reinforcement learning algorithms that tune coolant flow rates at the individual server level, achieving unprecedented precision in thermal management.
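Which reinforcement learning algorithms these companies use is not stated here. As a minimal illustration of the idea, the sketch below treats choosing a coolant flow rate for one server as a multi-armed bandit, the simplest reinforcement learning setting, and solves it with epsilon-greedy exploration. The plant model, flow options, and reward (pump energy plus an overheating penalty) are all hypothetical.

```python
import random

FLOW_OPTIONS_LPM = [0.5, 1.0, 1.5, 2.0, 2.5]  # hypothetical coolant flow settings, litres/min
CHIP_POWER_W = 300.0       # hypothetical chip heat load
COOLANT_INLET_C = 30.0     # hypothetical coolant inlet temperature
TEMP_LIMIT_C = 70.0        # hypothetical maximum chip temperature


def simulate_server(flow, rng):
    """Toy plant: thermal resistance falls as flow rises; pump power grows with flow cubed."""
    chip_temp = COOLANT_INLET_C + CHIP_POWER_W * (0.1 / flow) + rng.uniform(-1.0, 1.0)
    pump_w = 4.0 * flow ** 3
    overheat_penalty = 10.0 * max(0.0, chip_temp - TEMP_LIMIT_C)
    return -(pump_w + overheat_penalty)  # higher reward = less energy and no overheating


def epsilon_greedy(steps=500, epsilon=0.1, seed=0):
    """Learn the mean reward of each flow setting by trial and error."""
    rng = random.Random(seed)
    counts = [0] * len(FLOW_OPTIONS_LPM)
    values = [0.0] * len(FLOW_OPTIONS_LPM)  # running mean reward per flow setting
    for step in range(steps):
        if step < len(FLOW_OPTIONS_LPM):
            arm = step                                    # try every setting once first
        elif rng.random() < epsilon:
            arm = rng.randrange(len(FLOW_OPTIONS_LPM))    # explore
        else:
            arm = max(range(len(values)), key=values.__getitem__)  # exploit
        reward = simulate_server(FLOW_OPTIONS_LPM[arm], rng)
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]  # incremental mean update
    return values, counts


values, counts = epsilon_greedy()
for flow, value, count in zip(FLOW_OPTIONS_LPM, values, counts):
    print(f"{flow:.1f} L/min: mean reward {value:8.2f} over {count} trials")
```

In this toy setup the agent concentrates on 1.0 L/min, the cheapest flow that keeps the chip under its limit. A production controller would add state (current load, inlet temperature), turning the bandit into a full reinforcement learning problem.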

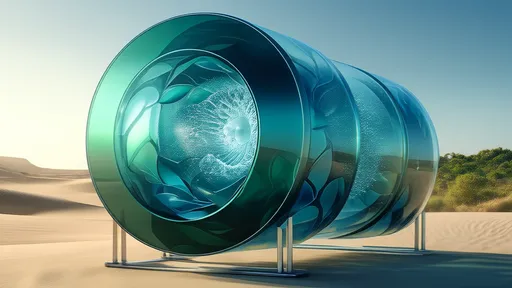

Microsoft's Project Natick—the underwater data center experiment—provided striking validation of these approaches. Their AI-managed marine cooling system leveraged ocean current predictions to adjust heat exchanger operations, maintaining optimal temperatures despite varying tidal flows. The system's success has inspired similar bio-inspired cooling concepts for land-based facilities.

The Road to Autonomous Thermal Management

The next evolution involves fully autonomous cooling systems that self-diagnose and reconfigure. Researchers at ETH Zurich recently demonstrated a neural network that could predict impending thermal anomalies hours before they occurred by analyzing subtle airflow perturbations. When integrated with robotic duct systems, such AI could theoretically prevent overheating events before they impact server performance.
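Forecasting anomalies hours ahead with a neural network is beyond a short example. As a much simpler stand-in that only detects, rather than predicts, a rolling z-score test flags airflow readings that deviate sharply from recent behavior. The window size, threshold, and sensor trace below are hypothetical.

```python
import statistics
from collections import deque


def detect_anomalies(readings, window=20, threshold=3.0):
    """Return indices whose reading is more than `threshold` standard deviations
    from the mean of the preceding `window` readings (a rolling z-score test)."""
    history = deque(maxlen=window)
    flagged = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mean = statistics.fmean(history)
            std = statistics.pstdev(history)
            if std > 0 and abs(value - mean) / std > threshold:
                flagged.append(i)
        history.append(value)
    return flagged


# Hypothetical airflow sensor trace (m/s): steady oscillation, then one sharp perturbation.
readings = [2.0 if i % 2 == 0 else 2.1 for i in range(40)] + [3.0] + [2.0, 2.1] * 5
print(detect_anomalies(readings))  # -> [40]
```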

As quantum computing and high-performance computing push power densities to new extremes, the marriage of fluid dynamics and artificial intelligence may well determine the sustainable future of digital infrastructure. The physics that once constrained our cooling capabilities now, through intelligent interpretation, provides the key to overcoming them.

By /Jul 10, 2025
